This is likely one of those obscure posts that maybe three other people on the planet are interested in, but after the effort it took to get this working, I just had to write it up. It’s worth noting, though, that while this configuration is specific to an application we use, much of what follows deals with troubleshooting permissions on files and services, which could affect any number of applications or configurations. Hopefully some of this info is useful to a wider audience. If nothing else, it’s good documentation for my department’s records.

If you just want to get PaperCut print archiving working, you can jump straight to Making the Necessary Changes.

Background and Troubleshooting

We use PaperCut to monitor print usage. A while back they added a print archiving feature that allows users to review and retrieve copies of printed documents through the PaperCut interface. At the time I looked into the configuration and it seemed rather complicated – plus we had no specific reason to implement it – so I put it on the back burner. Fast forward to a few days ago and the time had come to get it working.

Like many organizations, we have a centralized print server cluster handling all of our networked printers, but we still have dozens of local printers. The way PaperCut works in this case is that each PC with an attached printer is a “secondary print server” that reports back to the PaperCut application server. And that’s where things get complicated.

In this configuration, PaperCut needs a network share to hold the print archive, and the PaperCut service (“PCPrintProvider”) that gets installed on each PC needs the ability to copy the spool files to that share. For printers that are local to the server hosting the archive share itself, this is easy. In our case, I added a SAN LUN to our print cluster, changed the print-provider.conf file to point the archive to the local drive letter for that LUN, restarted the PCPrintProvider service, and was done. All our networked printers were archiving within minutes. (I’m deliberately glossing over a few steps that are nicely detailed in the PaperCut documentation, and if you’re hoping to set up archiving and are already lost, don’t bother going any further. It gets worse.)

But here’s the rub when it comes to all those local printers… The PCPrintProvider service runs as “Local System” by default, an account that authenticates on the network only as the PC’s own computer account, so it has no access to the archive share unless every PC’s computer account is granted rights to it. To resolve this, the service needs to run under a domain account instead, which we can then grant permissions on the share. OK, that’s not so bad. But the PaperCut documentation makes passing reference to the real problem: “Create a new domain account with access to the [archive] share […] and full management rights of print spooler on the local machine.”

That was a new one for me; I’ve never run across anything that required changing permissions on the print spooler, and searching online didn’t turn up anything either. In retrospect I probably should have contacted PaperCut support, but the documentation gives the distinct impression they don’t want to provide too much info about making changes that could cause your system to become seriously FUBAR’d. Plus it never hurts to learn something when figuring out a problem like this.

Anyway, one thing at a time. Starting with the easy and obvious stuff, I:

- Created a domain user as a PaperCut service account

- Granted that user permissions to the print archive (UNC) share

- Gave that user account the “Log on as a Service” right through Group Policy

- Changed the PCPrintProvider service to log on as the domain user account

- Copied over the updated print-provider.conf file, pointing to the archive share

The PCPrintProvider service restarted without any problem, but not only did I not have archiving, I was no longer getting even the basic reporting of print jobs to PaperCut. I wasn’t terribly surprised, to be honest, because I hadn’t yet done anything to address the need for “full management rights of [the] print spooler.” Time to look at that. Before going any further, I should point out that in the interest of time, and because I didn’t document every single troubleshooting step along the way, I am skipping over a lot of detail about my failed attempts to fix this. The important stuff is here, though.

One of the first things I tried was changing the log-on account for the Print Spooler service (“spooler”) to my PaperCut service account. Once this was done, the spooler threw an Access Denied error and refused to start. I then gave the service account full control of the spooler service (which can be done through Group Policy or via subinacl.exe), but the spooler still would not start under that account, so I set it back to its default of “Local System.”

Next, I launched Process Monitor to look for Access Denied results. The one that immediately stood out was “write” access being denied on the PaperCut NG\providers\print\win directory; the exact path varies depending on whether the system is x86 or x64 and, apparently, on which version of PaperCut was originally installed. I gave the service account full control of this directory, at which point the print-provider.log file began to update.
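If you save the Process Monitor capture as CSV, a short script can boil thousands of events down to the distinct access-denied targets for one process. A minimal Python sketch, assuming Process Monitor’s default CSV columns (“Process Name”, “Operation”, “Path”, “Result”); the script name denied.py is hypothetical, and you would pass whatever executable name the PCPrintProvider service actually runs as.

```python
import csv
import sys
from typing import Iterable, Iterator, List, Optional, Tuple


def access_denied_rows(lines: Iterable[str],
                       process_name: Optional[str] = None) -> Iterator[dict]:
    """Yield Process Monitor CSV rows whose Result column is ACCESS DENIED.

    `lines` can be an open file or any iterable of CSV lines. Assumes the
    default export columns ("Process Name", "Operation", "Path", "Result").
    """
    for row in csv.DictReader(lines):
        if (row.get("Result") or "").strip().upper() != "ACCESS DENIED":
            continue
        # Optional case-insensitive filter on the process name
        if process_name and (row.get("Process Name") or "").lower() != process_name.lower():
            continue
        yield row


def unique_targets(rows: Iterable[dict]) -> List[Tuple[str, str]]:
    """Collapse repeated failures into unique (Operation, Path) pairs, first-seen order."""
    seen = {}
    for row in rows:
        seen.setdefault((row["Operation"], row["Path"]), None)
    return list(seen)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python denied.py Logfile.CSV [process.exe]
    # utf-8-sig tolerates a byte-order mark at the start of the file, if present.
    proc = sys.argv[2] if len(sys.argv) > 2 else None
    with open(sys.argv[1], encoding="utf-8-sig", newline="") as f:
        for op, path in unique_targets(access_denied_rows(f, proc)):
            print(op, path)
```

Each printed path is a candidate for a permission grant, which is essentially how the directory and registry entries in the step list below were found.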

The log file then identified a registry key that the application couldn’t access either: HKLM\System\CurrentControlSet\Control\Print. I changed those permissions and restarted the PCPrintProvider service. Still no job logging.

At least print-provider.log was pretty clear in showing what the error was:

Unable to open service control manager, when trying to get handle to service: Spooler – Access is denied. (Error: 5)

I temporarily gave the PaperCut service account admin privileges on the local PC, which did fix things, but I believe in “least user privilege” so adding a local administrator account domain-wide was not something I wanted to do. I removed the admin access and went back to troubleshooting, though I spent way too long trying to track this down and not getting anywhere.

The turning point came when I thought to enable failure auditing of, well, everything, which can be done through secpol.msc. With that in place, I restarted the PCPrintProvider service and immediately had a few failures show up in the security log. Unfortunately I didn’t take any screenshots, but the gist is that access was being denied to the Service Control Manager itself, not to the Print Spooler. (Recall I had earlier given the service account full control of the spooler, so I wouldn’t have expected issues there.)

Searching for info on the error shown led me to this link, which explains exactly how to identify these failures and fix them using sc.exe to edit the permissions on the service control manager. I don’t mean to gloss over this step, as it was THE problem step for us, but the link does a perfectly good job of walking you through the fix and there’s no reason for me to duplicate it here.

Once this was sorted out, job logging started working again and I was now back to working on print archiving. The PaperCut log file was helpful here again, and I ended up needing to make two more changes:

- Granting the service account full control of the local printer(s)

- Granting the service account access to C:\Windows\System32\Spool\Printers so it could access the spool files and copy them to the archive share

Once all that was done, print logging and archiving were both working properly. Finally.

Making the Necessary Changes

Based on my troubleshooting above, it appears the following steps all need to be completed on every PC running as a secondary PaperCut print server:

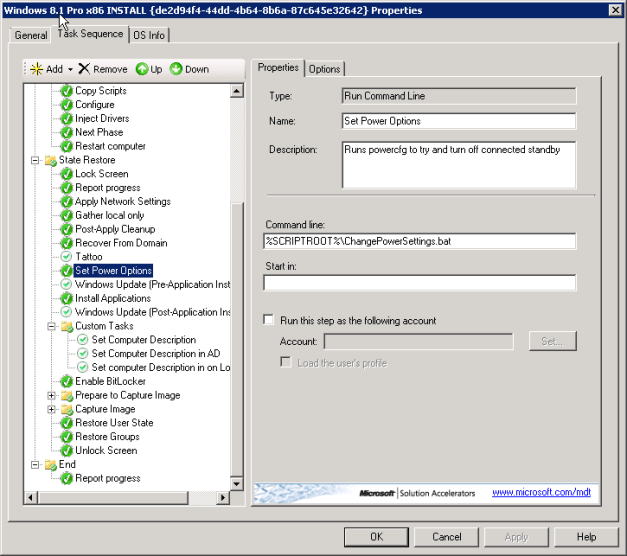

1. Stop the “PCPrintProvider” service

2. Grant the PaperCut service account the “Log on as a Service” right

3. Change the “Log On As” credentials for the “PCPrintProvider” service to a domain user account with access to your print archive share

4. Copy the new print-provider.conf file (with the correct archive path set via UNC) to the appropriate directory

5. Grant the PaperCut service account full control on the following directories:

   - C:\Program Files\PaperCut NG\providers\print\win (Program Files path may vary)

   - C:\Windows\System32\Spool\Printers

6. Grant the PaperCut service account full control on the registry key HKLM\System\CurrentControlSet\Control\Print

7. Grant the PaperCut service account full control on all local printers

8. Grant the PaperCut service account full control of the print spooler

9. Change the ACL on the Service Control Manager

10. Start the “PCPrintProvider” service

Alternatively you can grant your PaperCut service account local admin status on all PCs; that will make things nice and easy if you’re less concerned about security.

Steps 1 through 6 and step 10 should be easy enough to figure out, so I’ll focus on steps 7, 8 and 9.
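That said, steps 1, 5 and 6 script easily. A minimal Python sketch that only builds the command lines (sc.exe, icacls and subinacl.exe) for one PC rather than running them; the account name EXAMPLE\papercut-svc is hypothetical, the Program Files path is a placeholder, and using /subkeyreg rather than /keyreg for the Print key is an assumption on my part.

```python
from typing import List

# Placeholder paths taken from the step list above. The PaperCut path varies
# by architecture and by which PaperCut version was originally installed.
PROVIDER_DIR = r"C:\Program Files\PaperCut NG\providers\print\win"
SPOOL_DIR = r"C:\Windows\System32\Spool\Printers"
PRINT_KEY = r"HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\Print"


def prep_commands(account: str, provider_dir: str = PROVIDER_DIR) -> List[List[str]]:
    """Build argv lists for steps 1, 5 and 6 on one PC (nothing is executed)."""
    # (OI)(CI)F = full control, inherited by files and subfolders
    dir_grant = f"{account}:(OI)(CI)F"
    return [
        ["sc", "stop", "PCPrintProvider"],                  # step 1
        ["icacls", provider_dir, "/grant", dir_grant],      # step 5
        ["icacls", SPOOL_DIR, "/grant", dir_grant],         # step 5
        # step 6: /subkeyreg also updates the Print key's subkeys (an
        # assumption; /keyreg would change only the key itself)
        ["subinacl.exe", "/subkeyreg", PRINT_KEY, f"/grant={account}=F"],
    ]


if __name__ == "__main__":
    for cmd in prep_commands(r"EXAMPLE\papercut-svc"):  # hypothetical account
        print(cmd)
```

From an elevated prompt on the PC you could pass each list to subprocess.run(cmd, check=True); keep in mind that sc stop typically returns an error if the service is already stopped, so you may not want check=True on that one.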

Step 7: Grant PaperCut service account full control on all local printers

subinacl.exe can do this on a per-printer basis with the following command (substitute your own printer name and domain\useraccount):

subinacl.exe /noverbose /nostatistic /printer "Printer Name" /grant=domain\useraccount=F
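With many PCs, some with more than one printer, it helps to generate this command in a loop. A minimal Python sketch that builds the same subinacl.exe command for each printer name and renders it as a line you could paste into a batch file; the printer names and the EXAMPLE\papercut-svc account are hypothetical, and the simple quoting only handles names containing spaces.

```python
from typing import Iterable, List


def printer_grant_commands(printers: Iterable[str], account: str) -> List[List[str]]:
    """Build the step 7 subinacl.exe argv list for each local printer."""
    return [
        ["subinacl.exe", "/noverbose", "/nostatistic",
         "/printer", name, f"/grant={account}=F"]
        for name in printers
    ]


def as_batch_line(argv: List[str]) -> str:
    """Join argv into one command line, quoting arguments that contain spaces.

    Deliberately simple: it does not escape embedded double quotes.
    """
    return " ".join(f'"{arg}"' if " " in arg else arg for arg in argv)


if __name__ == "__main__":
    # Hypothetical printer names and account; replace with your own.
    for cmd in printer_grant_commands(["HP LaserJet 1020", "Label Printer"],
                                      r"EXAMPLE\papercut-svc"):
        print(as_batch_line(cmd))
```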

Step 8: Grant PaperCut service account full control of the print spooler

Again, subinacl.exe:

subinacl.exe /SERVICE Spooler /GRANT=domain\useraccount=F

Step 9: Change ACL on Service Control Manager

This is the troublesome one, as it involves several steps of its own. Rather than going through it all here, I recommend reviewing this link from networkadminkb.com. You’ll need to determine the SID for your PaperCut service account, and you may want to read up on SDDL strings. When you retrieve the SDDL string for the Service Control Manager, make sure you are working at an administrative (elevated) command prompt; the output will differ if you are not.
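The string edit in the middle is the fiddly part. The general pattern is to read the current descriptor with sc sdshow scmanager (elevated), add an allow ACE for the service account’s SID to the DACL (the D: portion), and write the result back with sc sdset scmanager. A minimal Python sketch of just the string-editing step, assuming a descriptor shaped like the sample below: the SID is made up, and the rights string CCLCRPRC (connect, enumerate services, query lock status, read control) is an assumption, so check it against the networkadminkb.com article before applying anything, and keep a copy of the original string so you can put it back.

```python
def add_ace_to_dacl(sddl: str, ace: str) -> str:
    """Return `sddl` with `ace` appended to the end of its DACL (the D: part).

    The ACE goes just before the SACL ("S:") if one follows the DACL,
    otherwise at the end of the string. If the exact ACE is already
    present, the descriptor is returned unchanged.
    """
    if not (ace.startswith("(") and ace.endswith(")")):
        raise ValueError("ACE must be a parenthesized SDDL ACE string")
    if ace in sddl:
        return sddl

    depth = 0          # parenthesis depth, so SIDs inside ACEs are ignored
    dacl_start = None  # index of "D:" at depth 0, once found
    for i, ch in enumerate(sddl):
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        elif depth == 0 and dacl_start is None and sddl.startswith("D:", i):
            dacl_start = i
        elif depth == 0 and dacl_start is not None and sddl.startswith("S:", i):
            # An allow ACE appended after existing ACEs keeps deny ACEs first.
            return sddl[:i] + ace + sddl[i:]
    if dacl_start is None:
        raise ValueError("no DACL (D:) found in SDDL string")
    return sddl + ace


if __name__ == "__main__":
    # Sample descriptor and SID are illustrative only, not real sc output.
    current = "D:(A;;CC;;;AU)(A;;KA;;;BA)S:(AU;FA;KA;;;WD)"
    print(add_ace_to_dacl(current, "(A;;CCLCRPRC;;;S-1-5-21-1-2-3-1001)"))
```

The printed string is what would then be passed, in quotes, to sc sdset scmanager from the same elevated prompt.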

Wrapping Up

It took me quite a while to get this working, and I hope this post saves at least a few people from going through the same process. I think the PaperCut installation ought to take care of most of this by itself, as right now print archiving is way more complicated to set up than it should be. Since it doesn’t, and because we needed to do this on a large number of PCs, I had to script a solution. If you’re interested in the script, let me know, and if there’s sufficient demand I’ll clean it up and post it.

Update 8-13-14: Here’s the script. Use at your own risk. It should be sufficiently commented so you can make the necessary changes for your environment.

Please leave any comments or questions you may have. Thanks!