I’ve written before about some of the challenges we’ve run into while implementing ADFS for Office 365. Now that we’re making more use of O365, I decided it was time to add a second ADFS server for redundancy. Given that our existing ADFS server is virtual, I thought it would be quicker to clone it and make the necessary changes rather than install another server from scratch. I was partially right.

As it turns out, cloning an existing ADFS server is pretty easy. Unfortunately, it took some time to track down the changes needed to make everything work properly, so I’m summarizing the process here in the hope of saving another admin the effort.

- Clone the system however you like.

-

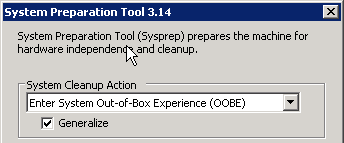

Sysprep the clone (c:\windows\system32\sysprep\sysprep.exe) making sure to check the “Generalize” option, which generates a new SID.

- After sysprep reboots the system, you will have to go through the initial out-of-box setup again. Reconfigure networking as appropriate and join the clone to your domain.

- Once this is done, all you have to do is add the clone to the existing federation server farm. This is where I ran into a problem though, and ultimately why I’m posting this info.

On the clone (let’s call it ADFS2 to make things simpler), the “AD FS 2.0 Windows Service” started, but trying to access the federation metadata at https://servername/federationmetadata/2007-06/federationmetadata.xml resulted in a “503 Service Unavailable” error. Checking the AD FS 2.0 log turned up five errors, all of which referenced issues with the certificates used for ADFS signing.

“During processing of the Federation Service configuration, the element ‘serviceIdentityToken’ was found to have invalid data. The private key for the certificate that was configured could not be accessed. The following are the values of the certificate:”

Each error showed a different element but was otherwise the same. In all cases, the errors pointed towards an issue with the ADFS service account being unable to access the private keys for the certificates.
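If you have several of these errors to sort through, a small script can list which configuration elements are affected. The following is only a rough sketch: it assumes you have already exported the event messages as plain text (how you export them is up to you), and the pattern is based solely on the wording of the message quoted above, which may differ in other AD FS versions. The function name `affected_elements` is my own invention for illustration.

```python
import re

# Pattern derived from the error text quoted above (an assumption: other
# AD FS versions may word the message differently). Accepts straight or
# curly quotes around the element name.
ELEMENT_RE = re.compile(
    r"the element [‘'\"](?P<element>[^’'\"]+)[’'\"] was found to have invalid data",
    re.IGNORECASE,
)

# Fragment that identifies the "private key could not be accessed" errors.
KEY_ERROR = "private key for the certificate that was configured could not be accessed"


def affected_elements(messages):
    """Return, in order of first appearance, the configuration elements
    named in private-key access errors."""
    found = []
    for message in messages:
        if KEY_ERROR not in message:
            continue  # not one of the certificate private-key errors
        match = ELEMENT_RE.search(message)
        if match and match.group("element") not in found:
            found.append(match.group("element"))
    return found
```

Passing in the message quoted above would return a list containing `serviceIdentityToken`. Restarting the service and re-running the script after each fix shows which elements are still failing.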

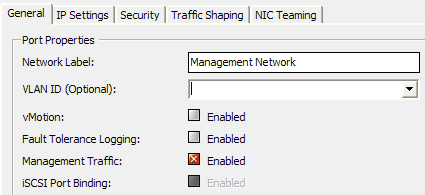

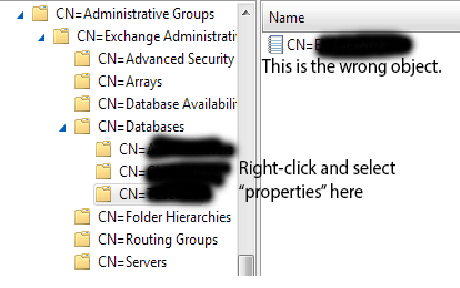

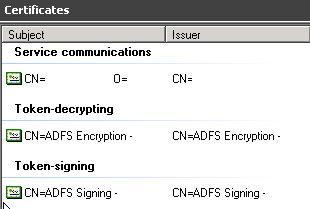

This is where things get a little complicated. ADFS relies on three certificates to make everything work. I’ve redacted a lot in the screenshot below, but you can see the three certificates. During a standard ADFS install, the “Service communications” certificate should be a 3rd party certificate that’s trusted (Verisign, etc.) but the Token-decrypting and Token-signing certificates are typically self-signed.

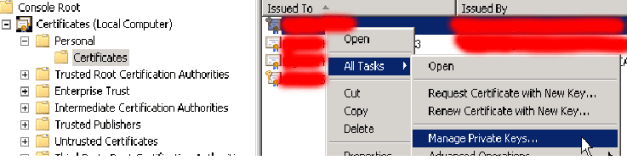

The “Service communications” certificate, being a public cert, is the easiest to address. Open the certificates MMC snap-in and connect to the ADFS computer’s certificate store. Your service certificate should be in the Personal folder. If you right-click the certificate, then select “All Tasks” and then “Manage Private Keys…”, you can verify that your ADFS service account has read permission on the private key. In my case, the account appeared to have permission, though as it turned out, it did not.

In the process of troubleshooting, I deleted the certificate from ADFS2, exported it from ADFS1 (including the private key), and re-imported it to ADFS2. When I then checked permissions on the private key on ADFS2, I ran into something strange…the ADFS service account was missing, and in its place was an unresolvable GUID. I deleted the GUID entry and re-added the ADFS service account. After restarting the AD FS 2.0 service, the first error, the one pertaining to serviceIdentityToken, was gone, but the others were still present.

It took me a while to find the other two certificates. They’re actually in the personal certificate store for your ADFS service user, so you’ll need to log on to the server with your service user’s credentials to access them. Unfortunately, while the certificates console shows a valid private key for both certs, you cannot export that key, nor can you “Manage” it as described above to review or change the permissions.

The final solution actually turned out to be pretty simple. I deleted both certs entirely on ADFS2, then re-ran the ADFS wizard and re-added ADFS2 to the ADFS farm. Doing so recreated the certificates and set whatever permissions were necessary. Problem solved.

Long story short…if I were to do this again, I would delete all three certificates from the cloned system before running the ADFS wizard. I’m not sure if you would still need to manually reset the permissions on the private key for the Service communications certificate, but I’m inclined to think not.

Keep in mind that even after adding the second ADFS server, you still need to set up load balancing so that both servers can handle authentication requests.
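Before pointing a load balancer at both servers, it is worth confirming that each one actually serves the federation metadata, since that was the request that returned the 503 on my clone. Here is a minimal sketch of such a check. The names `metadata_url`, `fetch_status`, and `check_farm` are hypothetical, as are any hostnames you pass in, and it assumes each hostname you probe is valid for the Service communications certificate (otherwise the TLS handshake fails and the node is reported as unhealthy).

```python
import urllib.error
import urllib.request

# Metadata path taken from the URL mentioned earlier in this post.
METADATA_PATH = "/federationmetadata/2007-06/federationmetadata.xml"


def metadata_url(host):
    """Build the federation metadata URL for one farm node."""
    return f"https://{host}{METADATA_PATH}"


def fetch_status(url, timeout=5.0):
    """Return the HTTP status code for url, or None if no HTTP response
    was received (DNS failure, TLS error, timeout, and so on)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # Raised for non-2xx responses such as 503; the code is still useful.
        return err.code
    except (urllib.error.URLError, OSError):
        return None


def check_farm(hosts, fetch=fetch_status):
    """Map each host to True if its metadata endpoint answered 200."""
    return {host: fetch(metadata_url(host)) == 200 for host in hosts}
```

Calling `check_farm` with the names of both servers returns a dictionary of host to healthy/unhealthy. The `fetch` parameter exists only so the logic can be exercised without a live server. This is a sanity check, not a substitute for whatever health monitoring your load balancer provides.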

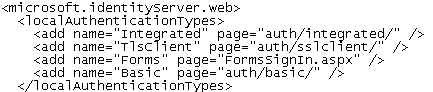

Bonus Tip: If during testing you notice that Chrome, Firefox, or any other non-IE browser repeatedly prompts for credentials, that’s normal. By default, the ADFS install enables Integrated Windows Authentication (IWA) with Extended Protection, which only works with Internet Explorer. If you need to disable this to support other browsers, you can.